Terraform is an open source tool for automated infrastructure orchestration that uses the concept of “infrastructure as code” to manage infrastructure changes. It is supported by public cloud providers such as AWS, Google Cloud Platform (GCP), Azure, Alibaba Cloud, and Ucloud, as well as by various community-supported providers, and it has become a standard in the field of “infrastructure as code”.

Terraform has the following advantages:

Support multi-cloud deployment

Terraform is well suited to multi-cloud solutions. It can deploy similar infrastructure to Alibaba Cloud, other cloud providers, or local data centers. Developers can use the same tools and similar configuration files to manage the resources of different cloud providers at the same time.

Automated infrastructure management

Terraform lets you create reusable modules, which reduces deployment and management errors caused by human factors.

Infrastructure as Code

You can use code to manage and maintain resources. Terraform saves the infrastructure state, which enables you to track changes to different components in your system and share these configurations with others.

Reduce development costs

You can reduce costs by creating development and deployment environments on demand. You can also evaluate changes before they are applied to the system.

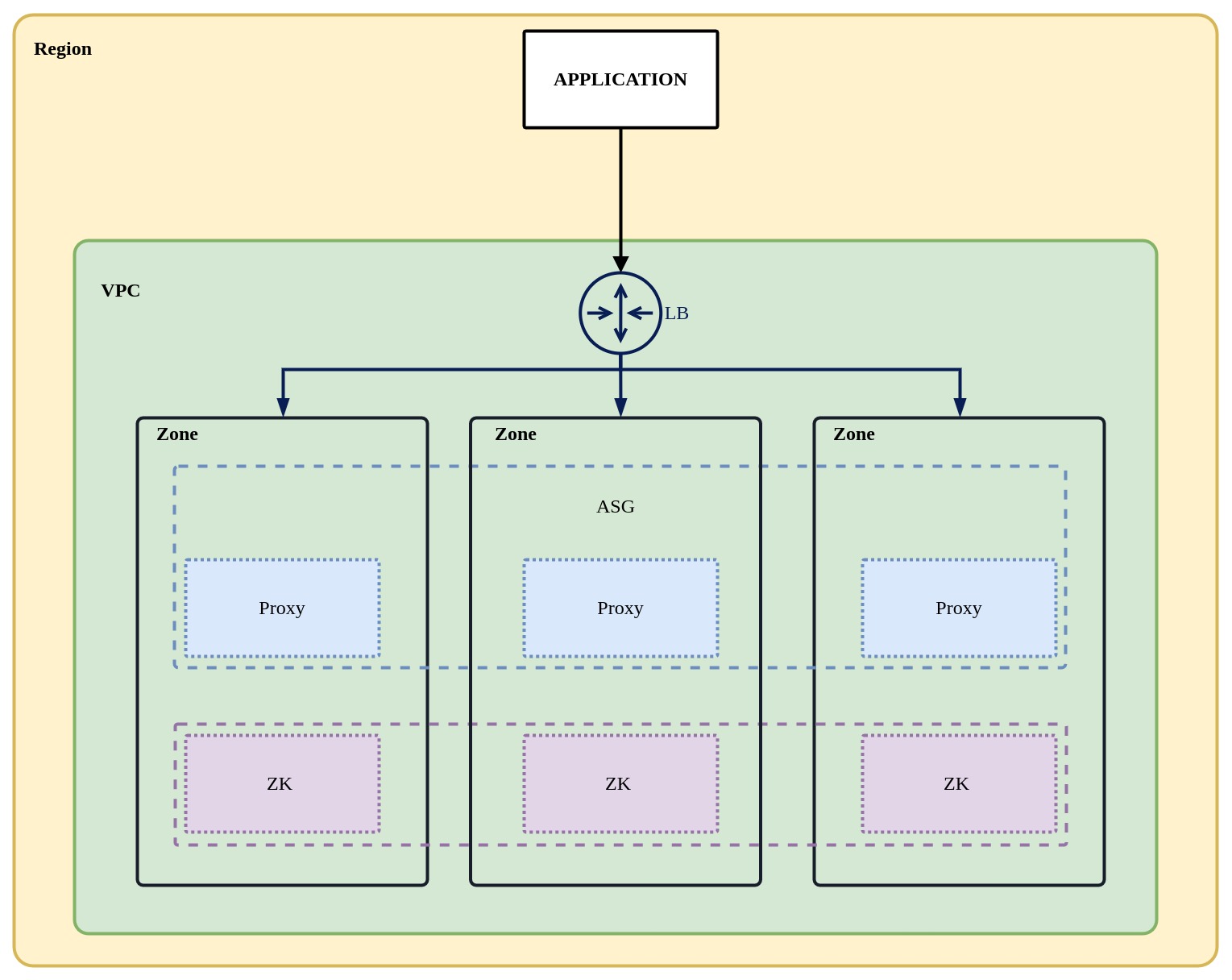

You can use Terraform to create a ShardingSphere high availability cluster on Amazon AWS. The cluster architecture is shown below. More cloud providers will be supported in the near future.

The Amazon resources created are the following:

To create a ShardingSphere Proxy highly available cluster, you need to prepare the following resources in advance:

Modify the parameters in main.tf according to the above prepared resources.

git clone --depth=1 https://github.com/apache/shardingsphere-on-cloud.git

cd shardingsphere-on-cloud/terraform/amazon

The commands mentioned below need to be executed in the ‘amazon’ directory.

terraform init

terraform plan

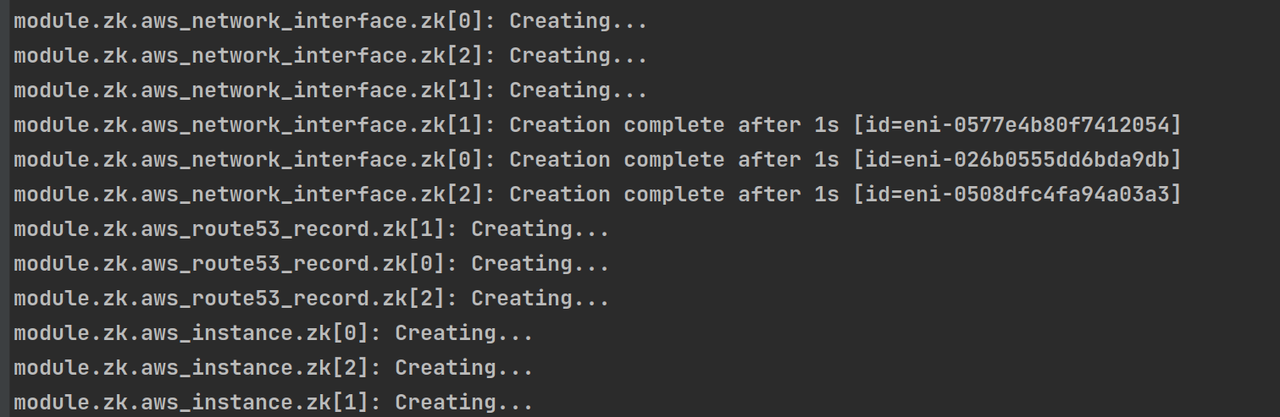

terraform apply
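The three commands above can also be chained so that `apply` executes exactly the plan that was reviewed. The following is a minimal sketch and not part of the repository: the function name `deploy_cluster` and the plan file name `tfplan` are assumptions made for illustration, while `-out` and applying a saved plan file are standard Terraform CLI features.

```shell
# Hypothetical helper (not part of shardingsphere-on-cloud): run the
# init -> plan -> apply sequence and stop at the first failing step.
# "tfplan" is an arbitrary file name chosen for this sketch.
deploy_cluster() {
    terraform init &&
    terraform plan -out=tfplan &&   # save the reviewed plan to a file
    terraform apply tfplan          # apply exactly the saved plan
}
```

Call `deploy_cluster` from the ‘amazon’ directory. Applying a saved plan file does not prompt for confirmation, so it is worth reviewing the plan output first, for example by running the `plan` step on its own.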

After creation, the following outputs will be available:

You need to record the value corresponding to shardingsphere_domain. Applications can access the proxy by connecting to the domain name.
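One way to record this value is to read it back with `terraform output`. This is a minimal sketch, assuming it runs in the ‘amazon’ directory after a successful `terraform apply`; the function name `proxy_domain` is hypothetical, and the output name `shardingsphere_domain` is the one mentioned above.

```shell
# Hypothetical helper: print the proxy domain name stored in the
# Terraform state. -raw prints the bare string without quotes.
proxy_domain() {
    terraform output -raw shardingsphere_domain
}
```

The result can then be captured in a variable, for example `PROXY_DOMAIN="$(proxy_domain)"`, and passed to the application's connection settings.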

terraform destroy

| Name | Version |
|---|---|
| aws | 4.37.0 |

| Name | Source |
|---|---|
| zk | ./zk |
| shardingsphere | ./shardingsphere |

| Name | Type | Description |
|---|---|---|
| shardingsphere_domain | string | The final ShardingSphere Proxy domain name provided internally, through which other applications can connect to the proxy |
| zk_node_domain | list(string) | List of domain names corresponding to the ZooKeeper service |

Internal resource list

| Name | Type |
|---|---|
| aws_instance.zk | resource |
| aws_network_interface.zk | resource |
| aws_route53_record.zk | resource |
| aws_ami.base | data source |
| aws_availability_zones.available | data source |
| aws_route53_zone.zone | data source |

Inputs

| Name | Description | Type | Default Value | Required |
|---|---|---|---|---|
| cluster_size | Cluster size, equal to the number of availability zones | number | n/a | yes |
| hosted_zone_name | Private zone name | string | `"shardingsphere.org"` | no |
| instance_type | EC2 instance type | string | n/a | yes |
| key_name | SSH key pair | string | n/a | yes |
| security_groups | Security group list. Ports 2181, 2888, and 3888 must be open | list(string) | [] | no |
| subnet_ids | Subnet list sorted by AZ in VPC | list(string) | n/a | yes |
| tags | ZooKeeper Server instance tags. The default is `Name=zk-${count.idx}` | map(any) | {} | no |
| vpc_id | VPC ID | string | n/a | yes |
| zk_config | Default configuration of ZooKeeper Server | map | `{ "client_port": 2181, "zk_heap": 1024 }` | no |
| zk_version | ZooKeeper Server version | string | `"3.7.1"` | no |

Outputs

| Name | Description |
|---|---|
| zk_node_domain | List of domain names corresponding to ZooKeeper Server |
| zk_node_private_ip | The intranet IP address of the ZooKeeper Server instance |

Internal resource list

| Name | Type |
|---|---|
| aws_autoscaling_attachment.asg_attachment_lb | resource |
| aws_autoscaling_group.ss | resource |
| aws_launch_template.ss | resource |
| aws_lb.ss | resource |
| aws_lb_listener.ss | resource |
| aws_lb_target_group.ss_tg | resource |
| aws_network_interface.ss | resource |
| aws_route53_record.ss | resource |
| aws_availability_zones.available | data source |
| aws_route53_zone.zone | data source |
| aws_vpc.vpc | data source |

Inputs

| Name | Description | Type | Default Value | Required |
|---|---|---|---|---|
| cluster_size | Cluster size, equal to the number of availability zones | number | n/a | yes |
| hosted_zone_name | Private zone name | string | `"shardingsphere.org"` | no |
| image_id | AMI image ID | string | n/a | yes |
| instance_type | EC2 instance type | string | n/a | yes |
| key_name | SSH key pair | string | n/a | yes |
| lb_listener_port | ShardingSphere Proxy startup port | string | n/a | yes |
| security_groups | Security group list | list(string) | [] | no |
| shardingsphere_version | ShardingSphere Proxy version | string | n/a | yes |
| subnet_ids | Subnet list sorted by AZ in VPC | list(string) | n/a | yes |
| vpc_id | VPC ID | string | n/a | yes |
| zk_servers | ZooKeeper servers | list(string) | n/a | yes |

Outputs

| Name | Description |
|---|---|
| shardingsphere_domain | The domain name provided by the ShardingSphere Proxy cluster. Other applications can connect to the proxy through this domain name. |

By default, ZooKeeper and ShardingSphere Proxy services created using our Terraform configuration can be managed using systemd.

systemctl start zookeeper

systemctl stop zookeeper

systemctl restart zookeeper

systemctl start shardingsphere-proxy

systemctl stop shardingsphere-proxy

systemctl restart shardingsphere-proxy
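To check whether a service is currently running, `systemctl is-active` can be used alongside the commands above. The following is a small sketch, assuming the unit names `zookeeper` and `shardingsphere-proxy` shown above; the function name `check_services` is hypothetical.

```shell
# Hypothetical helper: report the systemd state of each unit passed as
# an argument. Returns non-zero if any of the units is not active.
check_services() {
    failed=0
    for unit in "$@"; do
        if systemctl is-active --quiet "$unit"; then
            echo "$unit: active"
        else
            echo "$unit: not active"
            failed=1
        fi
    done
    return "$failed"
}
```

For example, run `check_services zookeeper` on a ZooKeeper instance and `check_services shardingsphere-proxy` on a ShardingSphere Proxy instance.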