ShardingSphere 5.3.0 is released: new features and improvements

After 1.5 months in development, Apache ShardingSphere 5.3.0 is released. Our community merged 687 PRs from contributors around the world.

The new release has been improved in terms of features, performance, testing, documentation, examples, etc.

The 5.3.0 release brings the following highlights:

- Support fuzzy query for CipherColumn.

- Support datasource-level heterogeneous databases.

- Support checkpoint resume for data consistency check.

- Automatically start a distributed transaction while executing DML statements across multiple shards.

Release 5.3.0 also brings the following adjustments:

- Remove the Spring configuration.

- Systematically refactor the DistSQL syntax.

- Refactor the configuration format of ShardingSphere-Proxy.

Highlights

1. Support fuzzy query for CipherColumn

In previous versions, ShardingSphere’s Encrypt feature didn’t support the use of the LIKE operator in SQL.

For some time, users had strongly requested LIKE support in the Encrypt feature. Encrypted fields are usually of string type, and running LIKE against strings is common practice.

To reduce friction in adopting the Encrypt feature, our community initiated a discussion about how to implement LIKE for encrypted data.

Since then, we’ve received a lot of feedback.

After fully investigating conventional solutions, some community members even contributed their own original encryption algorithm that supports fuzzy queries.

🔗 The relevant issue can be found here.

🔗 For the algorithm design, please refer to the attachment within the issue.

The single-character digest algorithm contributed by the community members is implemented as CHAR_DIGEST_LIKE in ShardingSphere's encryption algorithm SPI.
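To make the idea concrete, the following Java sketch shows one simplified way a per-character digest can support LIKE: each character is mapped independently to a digest character, so a LIKE pattern can be rewritten with the same mapping (keeping the % and _ wildcards) and evaluated against a stored digest column. This is a hypothetical illustration, not the CHAR_DIGEST_LIKE implementation; the class name, the MASK constant, and the mapping function are assumptions, and the algorithm design attached to the issue is the authoritative reference.

```java
import java.util.regex.Pattern;

// Hypothetical per-character digest for LIKE queries on encrypted data.
// NOT ShardingSphere's CHAR_DIGEST_LIKE implementation: MASK and digestChar are assumptions.
final class CharDigestLikeSketch {

    // Hypothetical secret; a real algorithm would take its parameters from configuration.
    private static final int MASK = 0x5A;

    // Maps one character to one digest character in 'A'..'Z'. The mapping is many-to-one,
    // so the plaintext cannot be read back from the digest.
    static char digestChar(char c) {
        return (char) ('A' + ((c ^ MASK) % 26));
    }

    // Digest stored in an assisted "like" column next to the ciphertext column.
    static String digest(String plain) {
        StringBuilder sb = new StringBuilder(plain.length());
        for (char c : plain.toCharArray()) {
            sb.append(digestChar(c));
        }
        return sb.toString();
    }

    // Rewrites a LIKE pattern: '%' and '_' are kept, every other character is digested.
    // Digests only contain 'A'..'Z', so they never collide with the wildcards.
    static String digestPattern(String likePattern) {
        StringBuilder sb = new StringBuilder(likePattern.length());
        for (char c : likePattern.toCharArray()) {
            sb.append(c == '%' || c == '_' ? c : digestChar(c));
        }
        return sb.toString();
    }

    // Simulates SQL LIKE (no ESCAPE clause) so the example runs without a database.
    static boolean like(String value, String pattern) {
        StringBuilder regex = new StringBuilder();
        for (char c : pattern.toCharArray()) {
            if (c == '%') {
                regex.append(".*");
            } else if (c == '_') {
                regex.append('.');
            } else {
                regex.append(Pattern.quote(String.valueOf(c)));
            }
        }
        return Pattern.matches(regex.toString(), value);
    }

    public static void main(String[] args) {
        String stored = digest("shardingsphere");
        // Plaintext "shardingsphere" LIKE "%ding%" is true, so the digest form matches too.
        System.out.println(like(stored, digestPattern("%ding%")));  // true
    }
}
```

Because this sketch's mapping is many-to-one, a digest match can also return rows whose plaintext does not match; balancing such false positives against how much the digest reveals is a design choice of the algorithm.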

2. Support datasource-level heterogeneous database

In previous versions, ShardingSphere supported a database gateway, but its heterogeneous capability was limited to the logical-database level. This meant that all the data sources under a logical database had to be of the same database type.

This new release supports datasource-level heterogeneous databases at the kernel level: the data sources under one logical database can now be of different database types, allowing you to store data in different databases.

Combined with ShardingSphere’s SQL dialect conversion capability, this new feature significantly enhances ShardingSphere’s heterogeneous data gateway capability.
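As a rough illustration of the difference, the hypothetical Java model below records a database type for each data source, derived here from the JDBC URL sub-protocol, rather than one type for the whole logical database. The class, its methods, and the URL-based type detection are assumptions made for this example and are not ShardingSphere APIs.

```java
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical model of one logical database whose data sources may use different database types.
// Class and method names are illustrative, not ShardingSphere APIs.
final class LogicalDatabaseSketch {

    // Data source name -> database type, e.g. "ds_0" -> "mysql".
    private final Map<String, String> dataSourceTypes = new LinkedHashMap<>();

    // Simplified assumption: take the type from the JDBC sub-protocol, "jdbc:<type>:...".
    static String typeOf(String jdbcUrl) {
        String prefix = "jdbc:";
        int end = jdbcUrl.indexOf(':', prefix.length());
        if (!jdbcUrl.startsWith(prefix) || end < 0) {
            throw new IllegalArgumentException("Not a JDBC URL: " + jdbcUrl);
        }
        return jdbcUrl.substring(prefix.length(), end);
    }

    void register(String dataSourceName, String jdbcUrl) {
        dataSourceTypes.put(dataSourceName, typeOf(jdbcUrl));
    }

    // Models the earlier restriction: every data source of a logical database shares one type.
    boolean isHomogeneous() {
        return new HashSet<>(dataSourceTypes.values()).size() <= 1;
    }

    // With datasource-level heterogeneity, the type is looked up per data source instead.
    String typeOfDataSource(String dataSourceName) {
        return dataSourceTypes.get(dataSourceName);
    }

    public static void main(String[] args) {
        LogicalDatabaseSketch db = new LogicalDatabaseSketch();
        db.register("ds_0", "jdbc:mysql://127.0.0.1:3306/demo_ds_0");
        db.register("ds_1", "jdbc:postgresql://127.0.0.1:5432/demo_ds_1");
        System.out.println(db.isHomogeneous());           // false
        System.out.println(db.typeOfDataSource("ds_1"));  // postgresql
    }
}
```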

3. Data migration: support checkpoint resume for data consistency check

Data consistency checks happen in the later stage of data migration.

Previously, the data consistency check was triggered and stopped by DistSQL. If a large amount of data had been migrated and the check was stopped for any reason, it had to be started again from the beginning, which is sub-optimal and hurts the user experience.

ShardingSphere 5.3.0 now supports checkpoint storage, which means data consistency checks can be resumed from the checkpoint.

For example, suppose data is being verified during migration and the user stops the verification for some reason when the verification progress (finished_percentage) is 5%:

mysql> STOP MIGRATION CHECK 'j0101395cd93b2cfc189f29958b8a0342e882';
Query OK, 0 rows affected (0.12 sec)

mysql> SHOW MIGRATION CHECK STATUS 'j0101395cd93b2cfc189f29958b8a0342e882';
+--------+--------+---------------------+-------------------+-------------------------+-------------------------+------------------+---------------+
| tables | result | finished_percentage | remaining_seconds | check_begin_time        | check_end_time          | duration_seconds | error_message |
+--------+--------+---------------------+-------------------+-------------------------+-------------------------+------------------+---------------+
| sbtest | false  | 5                   | 324               | 2022-11-10 19:27:15.919 | 2022-11-10 19:27:35.358 | 19               |               |
+--------+--------+---------------------+-------------------+-------------------------+-------------------------+------------------+---------------+
1 row in set (0.02 sec)

The user then restarts the data verification. The work does not start over from the beginning: the verification progress (finished_percentage) remains at 5%.

mysql> START MIGRATION CHECK 'j0101395cd93b2cfc189f29958b8a0342e882';
Query OK, 0 rows affected (0.35 sec)

mysql> SHOW MIGRATION CHECK STATUS 'j0101395cd93b2cfc189f29958b8a0342e882';
+--------+--------+---------------------+-------------------+-------------------------+----------------+------------------+---------------+
| tables | result | finished_percentage | remaining_seconds | check_begin_time        | check_end_time | duration_seconds | error_message |
+--------+--------+---------------------+-------------------+-------------------------+----------------+------------------+---------------+
| sbtest | false  | 5                   | 20                | 2022-11-10 19:28:49.422 |                | 1                |               |
+--------+--------+---------------------+-------------------+-------------------------+----------------+------------------+---------------+
1 row in set (0.02 sec)

Limitation: this new feature is unavailable with the CRC32_MATCH algorithm because the algorithm calculates all data at once.
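The general idea of a resumable check can be sketched in Java under a few assumptions: rows are compared record by record in primary-key order, in fixed-size chunks, and the last checked key is saved as a checkpoint after each chunk, so a restarted check continues after it and its progress is preserved. The class name, the in-memory checkpoint map (standing in for persistent storage), and the chunk size are hypothetical. By contrast, a single checksum computed over all rows in one pass has no intermediate state to save, which is in line with the CRC32_MATCH limitation above.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.NavigableMap;
import java.util.Objects;
import java.util.TreeMap;

// Hypothetical chunked, record-by-record consistency check that resumes from a checkpoint.
// Names and the in-memory checkpoint store are assumptions, not ShardingSphere APIs.
final class ResumableCheckSketch {

    enum Status { MATCHED, MISMATCHED, STOPPED }

    // Stand-in for persistent checkpoint storage: job id -> last primary key that was checked.
    private final Map<String, Long> checkpoints = new HashMap<>();
    private final int chunkSize;

    ResumableCheckSketch(int chunkSize) {
        this.chunkSize = chunkSize;
    }

    // Checks at most maxChunks chunks, then reports STOPPED (simulating a stopped check).
    Status run(String jobId, NavigableMap<Long, String> source, NavigableMap<Long, String> target, int maxChunks) {
        for (int done = 0; done < maxChunks; done++) {
            Long last = checkpoints.get(jobId);
            // Rows strictly after the checkpoint, in primary-key order.
            NavigableMap<Long, String> remaining = last == null ? source : source.tailMap(last, false);
            List<Long> chunk = remaining.keySet().stream().limit(chunkSize).toList();
            if (chunk.isEmpty()) {
                // Every source row matched; the target must not contain extra rows either.
                return source.size() == target.size() ? Status.MATCHED : Status.MISMATCHED;
            }
            for (Long key : chunk) {
                if (!Objects.equals(source.get(key), target.get(key))) {
                    return Status.MISMATCHED;
                }
            }
            // Save progress only after the whole chunk has been compared.
            checkpoints.put(jobId, chunk.get(chunk.size() - 1));
        }
        return Status.STOPPED;
    }

    // Share of source rows already covered by the checkpoint, similar to finished_percentage.
    int finishedPercentage(String jobId, NavigableMap<Long, String> source) {
        Long last = checkpoints.get(jobId);
        if (last == null || source.isEmpty()) {
            return 0;
        }
        return source.headMap(last, true).size() * 100 / source.size();
    }

    public static void main(String[] args) {
        NavigableMap<Long, String> source = new TreeMap<>();
        for (long id = 1; id <= 100; id++) {
            source.put(id, "row-" + id);
        }
        NavigableMap<Long, String> target = new TreeMap<>(source);
        ResumableCheckSketch checker = new ResumableCheckSketch(5);
        System.out.println(checker.run("job-1", source, target, 1));                  // STOPPED
        System.out.println(checker.finishedPercentage("job-1", source));              // 5
        System.out.println(checker.run("job-1", source, target, Integer.MAX_VALUE));  // MATCHED
    }
}
```

With 100 rows and a chunk size of 5, stopping after one chunk leaves the progress at 5, and the next run resumes from row 6 instead of row 1.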

4. Automatically start a distributed transaction while executing DML statements across multiple shards

Previously, even with XA or another distributed transaction type configured, ShardingSphere could not guarantee the atomicity of DML statements routed to multiple shards unless users manually started a transaction.

Take the following SQL as an example:

insert into account(id, balance, transaction_id) values
(1, 1, 1),(2, 2, 2),(3, 3, 3),(4, 4, 4),
(5, 5, 5),(6, 6, 6),(7, 7, 7),(8, 8, 8);

When this SQL is sharded by id mod 2, the ShardingSphere kernel automatically splits it into the following two SQL statements and routes each one to a different shard for execution:

insert into account(id, balance, transaction_id) values
(1, 1, 1),(3, 3, 3),(5, 5, 5),(7, 7, 7);

insert into account(id, balance, transaction_id) values
(2, 2, 2),(4, 4, 4),(6, 6, 6),(8, 8, 8);
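The splitting step can be sketched in Java as follows. The sketch assumes the rule is exactly id mod 2 over two shards, identified here only by the remainder; it groups the rows and renders one INSERT per shard. It illustrates the routing result only, not ShardingSphere's rewrite engine, and the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.StringJoiner;
import java.util.TreeMap;

// Hypothetical sketch: split a multi-row INSERT into one INSERT per shard using "id mod shardCount".
final class InsertSplitSketch {

    record Row(long id, long balance, long transactionId) {
        String toValues() {
            return "(" + id + ", " + balance + ", " + transactionId + ")";
        }
    }

    // Groups rows by shard index (id % shardCount) and renders one INSERT statement per shard.
    static Map<Long, String> split(List<Row> rows, int shardCount) {
        Map<Long, List<Row>> byShard = new TreeMap<>();
        for (Row row : rows) {
            byShard.computeIfAbsent(row.id() % shardCount, k -> new ArrayList<>()).add(row);
        }
        Map<Long, String> statements = new TreeMap<>();
        byShard.forEach((shard, shardRows) -> {
            StringJoiner values = new StringJoiner(",");
            shardRows.forEach(r -> values.add(r.toValues()));
            statements.put(shard, "insert into account(id, balance, transaction_id) values " + values + ";");
        });
        return statements;
    }

    public static void main(String[] args) {
        List<Row> rows = new ArrayList<>();
        for (long i = 1; i <= 8; i++) {
            rows.add(new Row(i, i, i));
        }
        split(rows, 2).forEach((shard, sql) -> System.out.println("shard " + shard + ": " + sql));
    }
}
```

Running main prints the even-id statement for shard 0 and the odd-id statement for shard 1, matching the two statements above.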

If the user has not started a transaction manually and one of the sharded statements fails, atomicity cannot be guaranteed, because the statement that already succeeded on the other shard cannot be rolled back.

ShardingSphere 5.3.0 improves how distributed transactions are handled. If distributed transactions are configured in ShardingSphere, one is started automatically when a DML statement is routed to multiple shards, which ensures the statement is executed atomically.
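The behavior described above can be sketched in Java. In this hypothetical model, a transaction is started automatically only when a distributed transaction type is configured, the user has not already started one, and the statement is routed to more than one shard. The shards are then driven through an XA-style two-phase commit (prepare on all, then commit), and any failure before commit rolls back every shard already touched. The Shard interface and all class names are assumptions standing in for real data sources and a transaction manager such as Atomikos.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: automatically wrap a DML statement routed to several shards in a
// two-phase (XA-style) transaction when a distributed transaction type is configured.
// Shard stands in for a real data source; none of these names are ShardingSphere APIs.
final class AutoTransactionSketch {

    interface Shard {
        void begin();
        void execute(String sql);  // throws a RuntimeException on failure
        void prepare();            // phase 1 of two-phase commit
        void commit();             // phase 2
        void rollback();
    }

    private final boolean distributedTransactionConfigured;

    AutoTransactionSketch(boolean distributedTransactionConfigured) {
        this.distributedTransactionConfigured = distributedTransactionConfigured;
    }

    // sqls.get(i) is the rewritten statement for shards.get(i).
    void executeDml(List<Shard> shards, List<String> sqls, boolean userTransactionActive) {
        if (shards.size() != sqls.size()) {
            throw new IllegalArgumentException("one SQL per shard expected");
        }
        boolean autoStart = distributedTransactionConfigured && !userTransactionActive && shards.size() > 1;
        if (!autoStart) {
            // Single shard, no distributed transaction configured, or the user's own transaction applies.
            for (int i = 0; i < shards.size(); i++) {
                shards.get(i).execute(sqls.get(i));
            }
            return;
        }
        List<Shard> begun = new ArrayList<>();
        try {
            for (int i = 0; i < shards.size(); i++) {
                Shard shard = shards.get(i);
                shard.begin();
                begun.add(shard);
                shard.execute(sqls.get(i));
            }
            for (Shard shard : begun) {
                shard.prepare();
            }
        } catch (RuntimeException e) {
            // Any failure before commit: undo the work already done on every shard touched.
            for (Shard shard : begun) {
                shard.rollback();
            }
            throw e;
        }
        // All shards prepared; a real XA manager would recover commit-phase failures from its log.
        for (Shard shard : begun) {
            shard.commit();
        }
    }

    // In-memory shard that records calls and can be told to fail on execute.
    static final class RecordingShard implements Shard {
        final List<String> log = new ArrayList<>();
        private final String name;
        private final boolean failOnExecute;

        RecordingShard(String name, boolean failOnExecute) {
            this.name = name;
            this.failOnExecute = failOnExecute;
        }

        public void begin() { log.add("begin"); }
        public void execute(String sql) {
            if (failOnExecute) {
                throw new IllegalStateException(name + " failed: " + sql);
            }
            log.add("execute");
        }
        public void prepare() { log.add("prepare"); }
        public void commit() { log.add("commit"); }
        public void rollback() { log.add("rollback"); }
    }

    public static void main(String[] args) {
        RecordingShard ds0 = new RecordingShard("ds_0", false);
        RecordingShard ds1 = new RecordingShard("ds_1", true);
        try {
            new AutoTransactionSketch(true).executeDml(List.of(ds0, ds1),
                    List.of("insert rows with odd ids", "insert rows with even ids"), false);
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
        System.out.println(ds0.log);  // [begin, execute, rollback]
    }
}
```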

Significant Adjustments

1. Remove Spring configuration

In earlier versions, ShardingSphere-JDBC provided services in the form of a DataSource. To introduce ShardingSphere-JDBC into a Spring/Spring Boot project without modifying code, you needed to use modules such as the Spring/Spring Boot Starter provided by ShardingSphere.

Supporting multiple configuration formats, however, led to the following problems:

- When the API changes, many configuration files need to be adjusted, which is a heavy workload.

- The community has to maintain multiple config files.

- Spring bean lifecycle management is susceptible to other dependencies of the project, such as PostProcessor failures.

- Spring Boot Starter and Spring NameSpace are affected by Spring, and their configuration styles are different from YAML.

- Spring Boot Starter and Spring NameSpace are affected by the Spring version. When users adopt them, the configuration may not be recognized and dependency conflicts may occur. For example, Spring Boot 1.x and 2.x use different configuration styles.

ShardingSphere 5.1.2 first supported introducing ShardingSphere-JDBC in the form of a JDBC Driver. This means applications only need to configure the Driver provided by ShardingSphere in the JDBC URL to access ShardingSphere-JDBC.
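A minimal Java sketch of Driver-based access follows. The driver class org.apache.shardingsphere.driver.ShardingSphereDriver and the jdbc:shardingsphere:classpath:<file> URL form reflect the ShardingSphere-JDBC Driver documentation as we understand it; please verify them against the documentation for your version. The file name config.yaml is hypothetical, and openConnection only works with the ShardingSphere-JDBC dependency and a valid YAML rule file on the classpath.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;

// Sketch of accessing ShardingSphere-JDBC through its JDBC Driver instead of Spring modules.
final class DriverAccessSketch {

    static final String DRIVER_CLASS = "org.apache.shardingsphere.driver.ShardingSphereDriver";

    // URL pointing at a YAML rule file on the classpath, e.g. "config.yaml" (hypothetical name).
    static String classpathUrl(String yamlFile) {
        return "jdbc:shardingsphere:classpath:" + yamlFile;
    }

    // Requires ShardingSphere-JDBC on the classpath; the YAML file holds data sources and rules.
    static Connection openConnection(String yamlFile) throws ClassNotFoundException, SQLException {
        Class.forName(DRIVER_CLASS);  // explicit load; JDBC 4 drivers are usually auto-registered
        return DriverManager.getConnection(classpathUrl(yamlFile));
    }

    public static void main(String[] args) {
        System.out.println(classpathUrl("config.yaml"));  // jdbc:shardingsphere:classpath:config.yaml
    }
}
```

In a Spring Boot project, the same driver class and URL would typically go into the standard spring.datasource.driver-class-name and spring.datasource.url properties, so no ShardingSphere-specific Spring module is needed.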

Removing the Spring configuration simplifies and unifies ShardingSphere's configuration mode. This adjustment not only simplifies ShardingSphere configuration across different usage modes, but also reduces maintenance work for the ShardingSphere community.

2. Systematically refactor the DistSQL syntax

One of the characteristics of Apache ShardingSphere is its flexible rule configuration and resource control capability.

DistSQL (Distributed SQL) is ShardingSphere’s SQL-like operating language. It’s used the same way as standard SQL, and is designed to provide incremental SQL operation capability.

ShardingSphere 5.3.0 systematically refactors DistSQL. The community redesigned the syntax, semantics and operating procedure of DistSQL. The new version is more consistent with ShardingSphere’s design philosophy and focuses on a better user experience.

Please refer to the latest ShardingSphere documentation for details. A DistSQL roadmap will be available soon, and you’re welcome to leave your feedback.

3. Refactor the configuration format of ShardingSphere-Proxy

In this update, ShardingSphere-Proxy has adjusted its configuration format and reduced the number of configuration files required for startup.

server.yaml before refactoring:

rules:
  - !AUTHORITY
    users:
      - root@%:root
      - sharding@:sharding
    provider:
      type: ALL_PERMITTED
  - !TRANSACTION
    defaultType: XA
    providerType: Atomikos
  - !SQL_PARSER
    sqlStatementCache:
      initialCapacity: 2000
      maximumSize: 65535
    parseTreeCache:
      initialCapacity: 128
      maximumSize: 1024

server.yaml after refactoring:

authority:
  users:
    - user: root@%
      password: root
    - user: sharding
      password: sharding
  privilege:
    type: ALL_PERMITTED
transaction:
  defaultType: XA
  providerType: Atomikos
sqlParser:
  sqlStatementCache:
    initialCapacity: 2000
    maximumSize: 65535
  parseTreeCache:
    initialCapacity: 128
    maximumSize: 1024

In ShardingSphere 5.3.0, server.yaml is no longer required to start Proxy. If no configuration file is provided, Proxy enables the default account root/root.
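The relationship between the old and new users formats above can be illustrated with a small hypothetical Java helper. It converts an old-style entry such as root@%:root into separate user and password fields, drops the trailing @ when the host part is empty (so sharding@:sharding becomes user sharding), and falls back to root/root when no users are configured. It mirrors the examples in this section only; it is not ShardingSphere's converter, and splitting each entry at its first colon is an assumption.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper mirroring the server.yaml examples: old "user@host:password" strings
// become new-style (user, password) entries. Not ShardingSphere's actual converter.
final class AuthorityUsersSketch {

    record User(String user, String password) { }

    // Converts one old-style entry, e.g. "root@%:root" -> User("root@%", "root").
    static User fromLegacy(String entry) {
        int colon = entry.indexOf(':');  // assumption: split at the first colon
        if (colon < 0) {
            throw new IllegalArgumentException("Expected user@host:password, got " + entry);
        }
        String user = entry.substring(0, colon);
        // An empty host ("sharding@") is written without the '@' in the new format.
        if (user.endsWith("@")) {
            user = user.substring(0, user.length() - 1);
        }
        return new User(user, entry.substring(colon + 1));
    }

    // Converts all entries; with nothing configured, falls back to the default root/root account.
    static List<User> convert(List<String> legacyEntries) {
        if (legacyEntries.isEmpty()) {
            return List.of(new User("root", "root"));
        }
        List<User> users = new ArrayList<>();
        for (String entry : legacyEntries) {
            users.add(fromLegacy(entry));
        }
        return users;
    }

    public static void main(String[] args) {
        convert(List.of("root@%:root", "sharding@:sharding")).forEach(System.out::println);
        // User[user=root@%, password=root]
        // User[user=sharding, password=sharding]
    }
}
```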

ShardingSphere is completely committed to becoming cloud native. Thanks to DistSQL, ShardingSphere-Proxy’s config files can be further simplified, which is more friendly to container deployment.

Release Notes

API Changes

- DistSQL: refactor syntax API, please refer to the user manual

- Proxy: change the configuration style of global rule, remove the exclamation mark

- Proxy: allow zero-configuration startup, enable the default account root/root when there is no Authority configuration

- Proxy: remove the default logback.xml and use API initialization

- JDBC: remove the Spring configuration and use Driver + YAML configuration instead

Enhancements

- DistSQL: new syntax REFRESH DATABASE METADATA, refresh logic database metadata

- Kernel: support DistSQL REFRESH DATABASE METADATA to load configuration from the governance center and rebuild MetaDataContext

- Support PostgreSQL/openGauss setting transaction isolation level

- Scaling: increase inventory task progress update frequency

- Scaling: DATA_MATCH consistency check support checkpoint resume

- Scaling: support drop consistency check job via DistSQL

- Scaling: rename column from sharding_total_count to job_item_count in job list DistSQL response

- Scaling: add sharding column in incremental task SQL to avoid broadcast routing

- Scaling: sharding column could be updated when generating SQL

- Scaling: improve column value reader for DATA_MATCH consistency check

- DistSQL: encrypt DistSQL syntax optimization, support like query algorithm

- DistSQL: add properties value check when REGISTER STORAGE UNIT

- DistSQL: remove useless algorithms at the same time when DROP RULE

- DistSQL: EXPORT DATABASE CONFIGURATION supports broadcast tables

- DistSQL: REGISTER STORAGE UNIT supports heterogeneous data sources

- Encrypt: support Encrypt LIKE feature

- Automatically start distributed transactions when executing DML statements across multiple shards

- Kernel: support client \d for PostgreSQL and openGauss

- Kernel: support select group by, order by statement when column contains null values

- Kernel: support parse RETURNING clause of PostgreSQL/openGauss Insert

- Kernel: SQL HINT performance improvement

- Kernel: support mysql case when then statement parse

- Kernel: support data source level heterogeneous database gateway

- (Experimental) Sharding: add sharding cache plugin

- Proxy: support more PostgreSQL datetime formats

- Proxy: support MySQL COM_RESET_CONNECTION

- Scaling: improve MySQLBinlogEventType.valueOf to support unknown event type

- Kernel: support case when for federation

Bug Fix

- Scaling: fix barrier node created at job deletion

- Scaling: fix part of columns value might be ignored in DATA_MATCH consistency check

- Scaling: fix jdbc url parameters are not updated in consistency check

- Scaling: fix tables sharding algorithm type INLINE is case-sensitive

- Scaling: fix incremental task on MySQL require mysql system database permission

- Proxy: fix the NPE when executing select SQL without storage node

- Proxy: support DATABASE_PERMITTED permission verification in unicast scenarios

- Kernel: fix the wrong value of worker-id in show compute nodes

- Kernel: fix route error when the number of readable data sources and weight configurations of the Weight algorithm are not equal

- Kernel: fix multiple groups of readwrite-splitting refer to the same load balancer name, and the load balancer fails problem

- Kernel: fix can not disable and enable compute node problem

- JDBC: fix data source is closed in ShardingSphereDriver cluster mode when startup problem

- Kernel: fix wrong rewrite result when part of logical table name of the binding table is consistent with the actual table name, and some are inconsistent

- Kernel: fix startup exception when use SpringBoot without configuring rules

- Encrypt: fix null pointer exception when Encrypt value is null

- Kernel: fix oracle parsing does not support varchar2 specified type

- Kernel: fix serial flag judgment error within the transaction

- Kernel: fix cursor fetch error caused by wasNull change

- Kernel: fix alter transaction rule error when refresh metadata

- Encrypt: fix EncryptRule cast to TransparentRule exception that occurs when the call procedure statement is executed in the Encrypt scenario

- Encrypt: fix exception which caused by ExpressionProjection in shorthand projection

- Proxy: fix PostgreSQL Proxy int2 negative value decoding incorrect

- Proxy: PostgreSQL/openGauss support describe insert returning clause

- Proxy: fix gsql 3.0 may be stuck when connecting Proxy

- Proxy: fix parameters are missed when checking SQL in Proxy backend

- Proxy: enable MySQL Proxy to encode large packets

- Kernel: fix oracle parse comment without whitespace error

- DistSQL: fix show create table for encrypt table

Refactor

- Scaling: reverse table name and column name when generating SQL if it’s SQL keyword

- Scaling: improve incremental task failure handling

- Kernel: governance center node adjustment, unify camel-case (hump) naming to underscore naming


Community Contribution

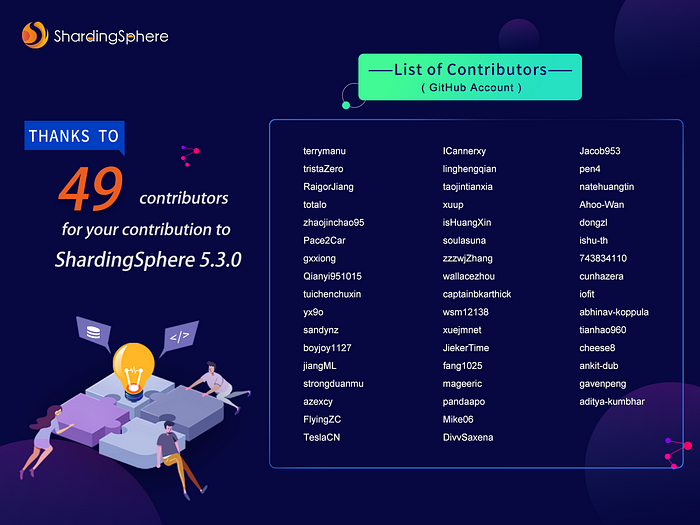

This Apache ShardingSphere 5.3.0 release is the result of 687 merged PRs, committed by 49 contributors. Thank you for your efforts.